Components

In this section we will describe the necessary components for this project. We have identified the following hardware components:

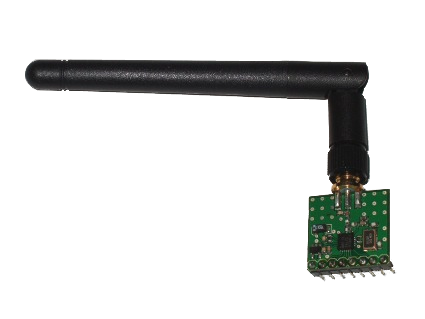

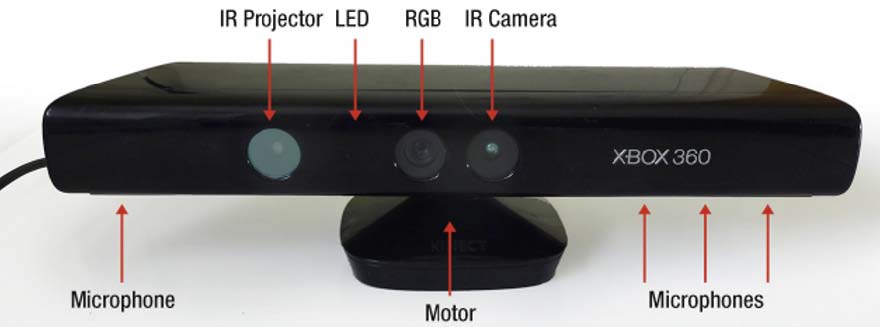

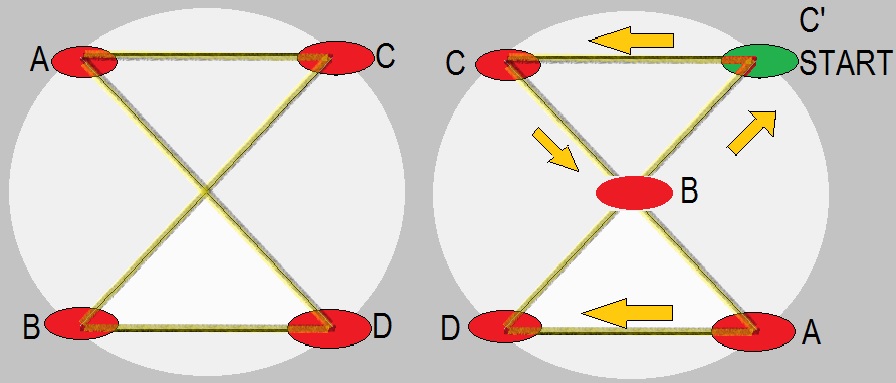


- a base-station, and

- four satellite-stations.

On this page, we first list some of the hardware requirements for the two components mentioned above. The specifications for some of these components can be found in the hardware sections of Project 1 and Project 2. We then briefly describe the nRF24L01 radios and the Kinect, a depth camera developed by PrimeSense and Microsoft. In the second part of this section, we describe the software requirements, such as the Kinect driver and development environment. In the final part, we use a message sequence chart to describe how these components communicate and act together to achieve precise, coordinated motion of multiple Roomba robots.